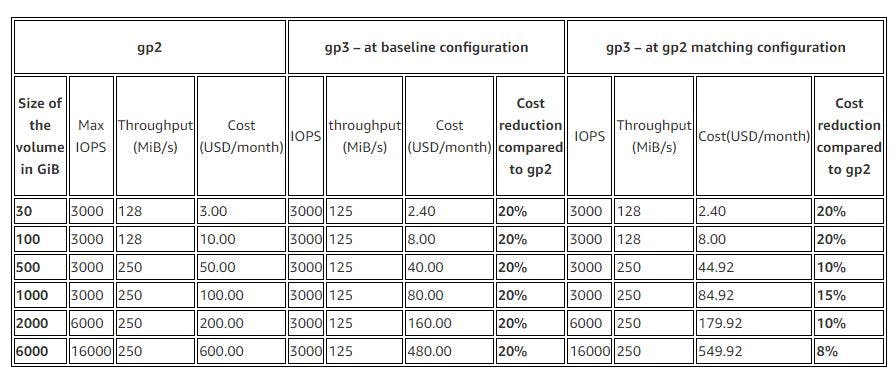

AWS announced gp3, its next-generation general-purpose SSD volume type, in December 2020. AWS still offers Provisioned IOPS volumes, which are costly but cater to specific use cases. gp3 gives customers the flexibility to choose IOPS and throughput based on their needs. The other advantage is that just by switching the volume type, you can cut your EBS storage bill by about 20% with similar or even better performance.

With gp2, DevOps teams were bound to 3 IOPS per GB of storage: a 100 GB volume gets you 300 IOPS and 128 MB/s of throughput. gp3 decouples storage performance from capacity, so you can provision higher IOPS and throughput without provisioning additional block storage capacity.
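To see what that coupling means in practice, here is a minimal Python sketch of the gp2 rule of 3 IOPS per GB. The function name `gp2_size_for_iops` is made up for illustration, and the sketch ignores gp2 bursting and the 16,000 IOPS cap.

```python
GP2_IOPS_PER_GB = 3  # gp2 baseline ratio quoted in this post


def gp2_size_for_iops(target_iops: int) -> int:
    """Smallest gp2 volume size (in GB) whose baseline reaches target_iops."""
    if target_iops <= 0:
        raise ValueError("target_iops must be positive")
    # Integer ceiling division, so there are no floating point surprises.
    return (target_iops + GP2_IOPS_PER_GB - 1) // GP2_IOPS_PER_GB


if __name__ == "__main__":
    for iops in (300, 3000, 16000):
        size = gp2_size_for_iops(iops)
        print(f"{iops} IOPS needs a gp2 volume of at least {size} GB")
```

On gp2, asking for more IOPS means paying for more storage whether or not you need the space. On gp3 you set the IOPS directly.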

gp2 is limited to a maximum of 250 MB/s, while gp3 provides up to 1,000 MB/s. From an IOPS perspective, both can scale up to 16,000 IOPS.

Next-generation gp3 volumes also help you scale for a wide variety of applications that require high performance at low cost, including MySQL, Cassandra, virtual desktops, and Hadoop analytics clusters.

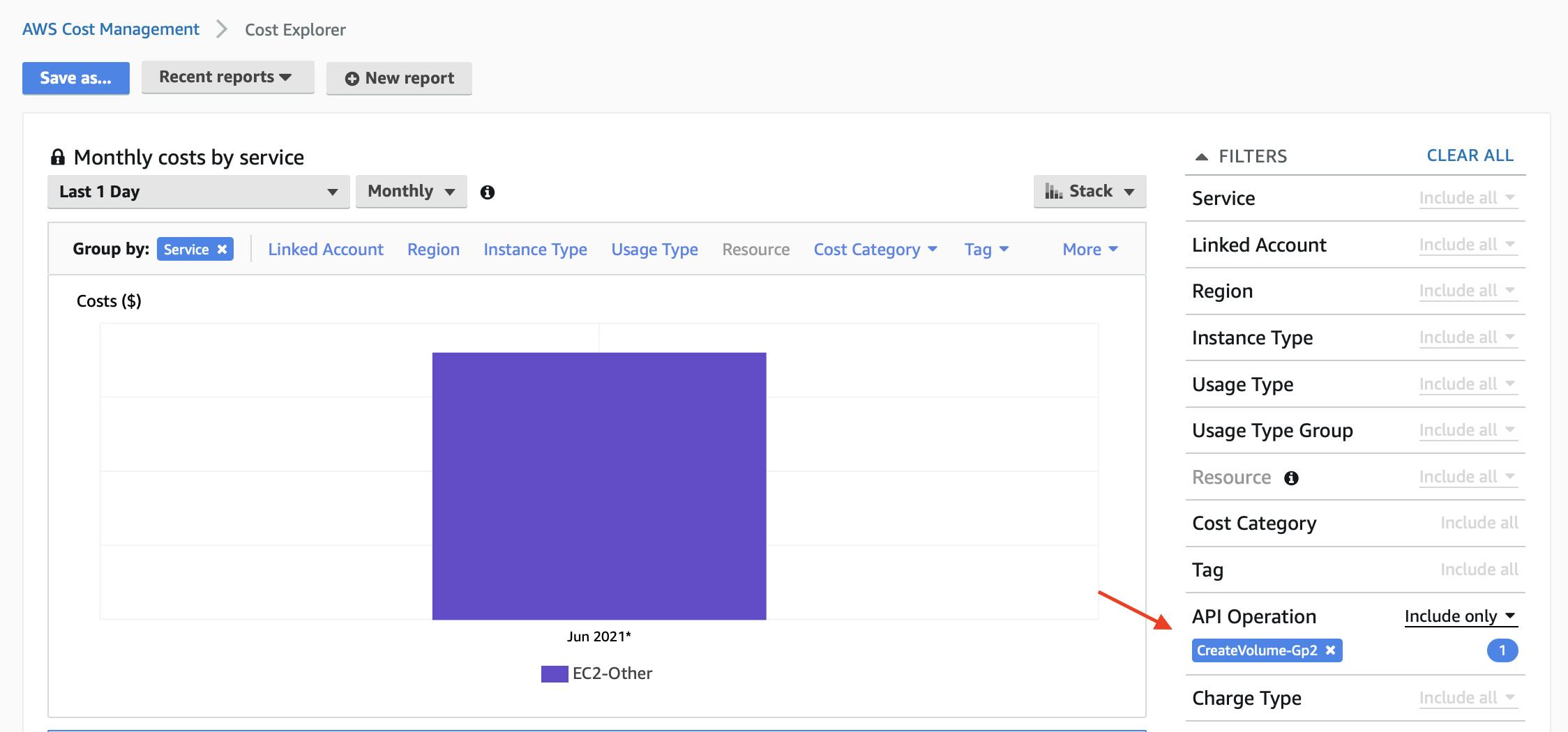

How to check 'gp2' EBS cost in the cost explorer

Go to Cost Explorer and filter by 'CreateVolume-Gp2' under API Operation on the right-hand side.

Comparing cost of 'gp2' and 'gp3' in Northern Virginia region
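As a rough worked example of that comparison, the Python sketch below estimates the monthly cost of both volume types. The prices are assumptions based on the Northern Virginia list prices as I understand them (gp2 at $0.10 per GB-month; gp3 at $0.08 per GB-month, plus $0.005 per provisioned IOPS above 3,000 and $0.04 per provisioned MB/s above 125), so check the current EBS pricing page before relying on them. The function names are illustrative only.

```python
from decimal import Decimal

# ASSUMED us-east-1 list prices in USD per month; verify against current AWS pricing.
GP2_PER_GB = Decimal("0.10")
GP3_PER_GB = Decimal("0.08")
GP3_PER_EXTRA_IOPS = Decimal("0.005")  # per provisioned IOPS above the included 3,000
GP3_PER_EXTRA_MBPS = Decimal("0.04")   # per provisioned MB/s above the included 125
GP3_INCLUDED_IOPS = 3000
GP3_INCLUDED_MBPS = 125


def gp2_monthly_cost(size_gb: int) -> Decimal:
    """gp2 is billed on capacity only."""
    return GP2_PER_GB * size_gb


def gp3_monthly_cost(size_gb: int,
                     iops: int = GP3_INCLUDED_IOPS,
                     throughput: int = GP3_INCLUDED_MBPS) -> Decimal:
    """gp3 is billed on capacity plus any IOPS/throughput above the baseline."""
    extra_iops = max(0, iops - GP3_INCLUDED_IOPS)
    extra_mbps = max(0, throughput - GP3_INCLUDED_MBPS)
    return (GP3_PER_GB * size_gb
            + GP3_PER_EXTRA_IOPS * extra_iops
            + GP3_PER_EXTRA_MBPS * extra_mbps)


if __name__ == "__main__":
    print("gp2, 100 GB:", gp2_monthly_cost(100))
    print("gp3, 100 GB at baseline:", gp3_monthly_cost(100))
    print("gp3, 100 GB, 4000 IOPS, 250 MB/s:", gp3_monthly_cost(100, 4000, 250))
```

Under these assumed prices, a 100 GB volume costs $10 on gp2 and $8 on gp3 at baseline, which is where the 20% saving comes from. Provisioning extra IOPS and throughput on top of the baseline is billed separately, so only ask for what the workload needs.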

How to migrate from gp2 to gp3

You can now modify your volume type from gp2 to gp3 without detaching volumes or restarting instances. This essentially means that there is no downtime or maintenance window needed to save cost and scale your business.

You can use the following CLI command to convert a gp2 volume; run it once for each volume you want to migrate:

    aws ec2 modify-volume --volume-type gp3 --iops 4000 --throughput 250 --volume-id vol-xxxx

Note: We passed the IOPS and throughput parameters explicitly. Before migrating, find out what the application has actually used over the past 2 weeks; otherwise you might accidentally migrate a gp2 volume to a slower-performing gp3 volume. Though baseline performance should protect you from potential downtime, it is always better to pass these 2 parameters to be sure.
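One way to turn observed usage into those two parameters is sketched below in Python. It is only a sketch under stated assumptions: the peak values would come from your own monitoring, the 20% headroom is an arbitrary default, the function names are hypothetical, and the limits are the ones quoted in this post plus the gp3 baseline of 3,000 IOPS and 125 MB/s. It also applies the gp3 rule that throughput may not exceed 0.25 MB/s per provisioned IOPS, but it does not check the per-GB IOPS limit.

```python
from __future__ import annotations

GP3_MIN_IOPS, GP3_MAX_IOPS = 3000, 16000  # gp3 baseline and the maximum quoted in this post
GP3_MIN_MBPS, GP3_MAX_MBPS = 125, 1000    # gp3 baseline and the maximum quoted in this post
IOPS_PER_MBPS = 4                         # gp3 allows at most 0.25 MB/s per provisioned IOPS


def _with_headroom(value: int, headroom_pct: int) -> int:
    """Scale value up by headroom_pct percent, rounding up (integer math only)."""
    return (value * (100 + headroom_pct) + 99) // 100


def gp3_settings(peak_iops: int, peak_mbps: int, headroom_pct: int = 20) -> tuple[int, int]:
    """Return (iops, throughput) to request, clamped to the gp3 range."""
    mbps = min(max(_with_headroom(peak_mbps, headroom_pct), GP3_MIN_MBPS), GP3_MAX_MBPS)
    iops = min(max(_with_headroom(peak_iops, headroom_pct), GP3_MIN_IOPS), GP3_MAX_IOPS)
    # Raise IOPS if needed so the requested throughput is allowed.
    iops = min(max(iops, mbps * IOPS_PER_MBPS), GP3_MAX_IOPS)
    return iops, mbps


def modify_volume_command(volume_id: str, iops: int, mbps: int) -> list[str]:
    """Build the modify-volume call from this post as an argument list."""
    return ["aws", "ec2", "modify-volume", "--volume-type", "gp3",
            "--iops", str(iops), "--throughput", str(mbps),
            "--volume-id", volume_id]


if __name__ == "__main__":
    iops, mbps = gp3_settings(peak_iops=3500, peak_mbps=200)
    print(" ".join(modify_volume_command("vol-xxxx", iops, mbps)))
```

The argument list can be passed to `subprocess.run` as-is, which avoids shell quoting issues if you loop over many volume IDs.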

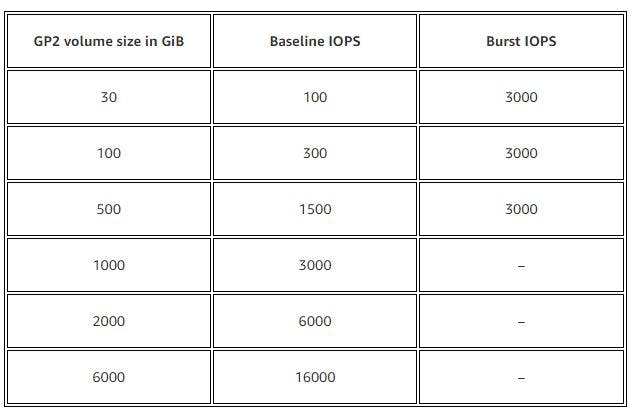

The baseline performance of a gp2 volume scales linearly at 3 IOPS per GiB of volume size, with a minimum of 100 IOPS (at 33.33 GiB and below) and a maximum of 16,000 IOPS (at 5,334 GiB and above). Volumes whose baseline is below 3,000 IOPS can also burst to 3,000 IOPS.
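The scaling rule above fits in a few lines of Python. This is just a sketch of the arithmetic with an illustrative function name, and it leaves bursting out.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of a gp2 volume: 3 per GiB, at least 100, at most 16,000."""
    if size_gib <= 0:
        raise ValueError("size_gib must be positive")
    return min(max(3 * size_gib, 100), 16000)


if __name__ == "__main__":
    for size in (10, 100, 1000, 5334):
        print(f"{size} GiB -> {gp2_baseline_iops(size)} IOPS")
```

For the 10 GB volume used in the Terraform section of this post, this gives 100 IOPS, which matches the `iops = 100` shown in the plan output.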

Baseline performance protects you from over-provisioning

Every gp3 volume gets a baseline of 3,000 IOPS and 125 MB/s of throughput regardless of its size. If that meets your needs, don't pass the extra parameters and you will be fine.

How to monitor the progress of the migration

You can also monitor the progress of the migration using the following CLI command

    aws ec2 describe-volumes-modifications --volume-ids vol-xxxx

The output looks like the following and shows the progress of your volume modification.

    {
        "TargetSize": 100,
        "TargetVolumeType": "gp3",
        "ModificationState": "modifying",
        "VolumeId": "vol-xxxx",
        "TargetIops": 4000,
        "StartTime": "2021-06-19T20:05:02.865Z",
        "Progress": 0,
        "OriginalVolumeType": "gp2",
        "OriginalIops": 300,
        "OriginalSize": 100
    }
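If you want to script the check, the fields in that output are enough to tell whether a modification is still running. The Python sketch below works on one such JSON object; the helper names are made up for illustration, and it relies on `ModificationState` being one of `modifying`, `optimizing`, `completed` or `failed`.

```python
import json

# States in which EC2 is still working on the volume.
IN_PROGRESS_STATES = {"modifying", "optimizing"}


def is_finished(modification: dict) -> bool:
    """True once the modification has stopped, whether it completed or failed."""
    return modification["ModificationState"] not in IN_PROGRESS_STATES


def describe_progress(modification: dict) -> str:
    """One-line, human-readable summary of a volume modification."""
    volume = modification["VolumeId"]
    state = modification["ModificationState"]
    if state in IN_PROGRESS_STATES:
        return f"{volume}: {state}, {modification.get('Progress', 0)}% done"
    return f"{volume}: {state}"


if __name__ == "__main__":
    sample = json.loads("""
    {
        "TargetVolumeType": "gp3",
        "ModificationState": "modifying",
        "VolumeId": "vol-xxxx",
        "Progress": 0,
        "OriginalVolumeType": "gp2"
    }
    """)
    print(describe_progress(sample))
    print("finished:", is_finished(sample))
```

A polling loop would call the CLI command above every few seconds and stop once `is_finished` returns true, treating `failed` as an error.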

How to modify using Terraform?

By default, Terraform creates gp2 volumes, so you need to set the volume type explicitly to convert them in place. Many Terraform resources have to be destroyed and recreated when you change certain attributes, but that is not the case for a gp2 to gp3 migration.

Sample code to create a 10 GB gp2 EBS volume.

    resource "aws_ebs_volume" "test-ebs" {
      availability_zone = "us-west-2a"
      size              = 10

      tags = {
        Name = "Test EBS"
      }
    }

Sample code to migrate to, or create, a 10 GB gp3 EBS volume.

    resource "aws_ebs_volume" "test-ebs" {
      availability_zone = "us-west-2a"
      size              = 10
      type              = "gp3"

      tags = {
        Name = "Test EBS"
      }
    }

When you run plan/apply, you will see the following change happening in your infrastructure.

    # aws_ebs_volume.test-ebs will be updated in-place
    ~ resource "aws_ebs_volume" "test-ebs" {
          id = "vol-xxxxx"
          tags = {
              "Name" = "Test EBS"
          }
        ~ type = "gp2" -> "gp3"
          # (8 unchanged attributes hidden)
      }

    Plan: 0 to add, 1 to change, 0 to destroy.

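There are 2 more parameters we discussed above, i.e. iops and throughput. You can add them to your existing Terraform code to change them on the fly. AWS documents a gp3 minimum of 3,000 IOPS and 125 MB/s, so treat the iops value of 200 in the sample below as a placeholder and use values in the supported range for a real volume.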

    resource "aws_ebs_volume" "test-ebs" {
      availability_zone = "us-west-2a"
      size              = 10
      type              = "gp3"
      iops              = 200
      throughput        = 250

      tags = {
        Name = "Test EBS"
      }
    }

Output

    # aws_ebs_volume.test-ebs will be updated in-place
    ~ resource "aws_ebs_volume" "test-ebs" {
          id = "vol-xxxx"
        ~ iops = 100 -> 200
          tags = {
              "Name" = "Test EBS"
          }
        ~ throughput = 0 -> 250
        ~ type = "gp2" -> "gp3"
          # (6 unchanged attributes hidden)
      }

    Plan: 0 to add, 1 to change, 0 to destroy.

How much time does it take to convert?

It took about 14 seconds to change one 10 GB volume, as the apply output below shows. I haven't yet tested a large volume under high IOPS or benchmarked a live application during the change. Feel free to comment if you need that; I would be happy to run the benchmark and share the results.

Output of terraform apply

    aws_ebs_volume.test-ebs: Modifying... [id=vol-xxxxx]
    aws_ebs_volume.test-ebs: Still modifying... [id=vol-xxxx, 10s elapsed]
    aws_ebs_volume.test-ebs: Modifications complete after 14s [id=vol-xxxx]

    Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Conclusion

You should move from gp2 to gp3 to save about 20% on EBS costs and to make your storage more scalable. You can even automate the process and auto-scale not just storage but also IOPS and throughput. At LambdaTest, we are working on these changes to give our customers a high-performing, scalable infrastructure to work on.

Happy Scaling :)